Have you ever imagined running OpenAI’s language models outside the data centers of cloud giants, directly on your enterprise or development team’s own hardware? That is now possible. With OpenAI’s official release of the open-source large models GPT-OSS 120B and 20B, the Advantech AIR-520 edge AI server is among the first platforms to support on-premises deployment, making AI applications more accessible than ever. Read on to see how it works.

What is the GPT-OSS Series? A One-Minute Guide to the Latest Open-Source AI Brain

GPT-OSS 120B and 20B are OpenAI’s open-source language models released in 2025, and the first time since GPT-2 that OpenAI has made model weights publicly available. The Apache 2.0 license means you are free to use, redistribute, and commercialize the models, while getting reasoning performance that approaches OpenAI’s o3-mini (20B) and o4-mini (120B).

Specification Overview

| Specification | GPT-OSS 20B | GPT-OSS 120B |
|---|---|---|
| Total Parameters | ~21 billion | ~117 billion |
| Active Parameters (MoE) | ~3.6B per token | ~5.1B per token |
| Context Length | 128,000 tokens | 128,000 tokens |
| Architecture | Transformer + MoE + Sparse Attention | Transformer + MoE + Sparse Attention |
| Open Source License | Apache 2.0 | Apache 2.0 |
| Performance Benchmark | Approaching o3-mini | Near o4-mini level |
| Memory Requirements | ~16 GB | ~80 GB (GPU) |
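As a rough sanity check on the memory figures, the weight footprint can be estimated from the parameter count and the number of bits stored per parameter. The sketch below is a back-of-the-envelope estimate, not a measurement. It assumes total parameter counts of roughly 21 billion and 117 billion (OpenAI’s published figures) and a uniform 4 bits per weight, which is a simplification; the function name is illustrative.

```python
def weight_memory_gb(total_params: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes."""
    bytes_total = total_params * bits_per_param / 8
    return bytes_total / 1e9


# Assumed parameter counts; uniform bit widths are a simplification.
for name, params in [("GPT-OSS 20B", 21e9), ("GPT-OSS 120B", 117e9)]:
    at_4_bits = weight_memory_gb(params, 4)
    at_16_bits = weight_memory_gb(params, 16)
    print(f"{name}: ~{at_4_bits:.1f} GB at 4 bits, ~{at_16_bits:.1f} GB at 16 bits")
```

The weights-only estimates (about 10.5 GB and 58.5 GB at 4 bits) come out below the ~16 GB and ~80 GB in the table. The difference is plausibly taken up by layers kept at higher precision, the KV cache, and runtime buffers, though the exact split depends on the inference stack.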

Technical Highlights

- Transformer + Mixture-of-Experts (MoE): Only a subset of experts is activated for each token, greatly reducing the compute needed per token.

- Sparse Attention Mechanism: Part of the attention computation covers only a limited window of tokens rather than the full sequence, lowering memory usage.

- Grouped Query Attention (GQA): Several query heads share one set of key/value heads, which shrinks the KV cache and speeds up inference.

- 128K Ultra-long Context: Capable of processing extensive data at once.

- 4-bit Quantization: Stores weights in about 4 bits each, further lowering memory requirements and inference costs.

Together, these techniques mean large models are no longer confined to the cloud: they can now run on high-end consumer or edge hardware.
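To make the MoE point concrete, here is a minimal toy sketch of top-k expert routing for a single token. It is one common formulation (pick the k highest router scores, then softmax over just those), not a description of the actual GPT-OSS router; the expert count, k, and function name are illustrative assumptions.

```python
import math
import random


def top_k_route(router_logits: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalize their gate weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(router_logits[i] for i in chosen)  # subtract max for numerical stability
    exp_scores = {i: math.exp(router_logits[i] - peak) for i in chosen}
    total = sum(exp_scores.values())
    return {i: score / total for i, score in exp_scores.items()}


random.seed(0)
NUM_EXPERTS, TOP_K = 8, 2  # toy sizes, not the real GPT-OSS configuration
logits = [random.gauss(0, 1) for _ in range(NUM_EXPERTS)]
routing = top_k_route(logits, TOP_K)
print(f"experts used for this token: {sorted(routing)} of {NUM_EXPERTS}")
print(f"gate weights sum to {sum(routing.values()):.3f}")
```

Only the selected experts run their feed-forward computation for that token, which is why the active parameter count per token is far smaller than the total.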

Advantech AIR-520: The AI Platform that Brings Large Models Down to Earth

AIR-520 is Advantech’s industrial-grade 4U Edge AI Server, purpose-built for edge AI applications. It features an AMD EPYC 7003 series processor with up to 64 cores, up to 768GB of DRAM, and support for up to four PCIe x16 GPUs. In our setup, four NVIDIA RTX 4000 Ada cards (80GB of VRAM in total) were enough to run the GPT-OSS 120B model with a small share of parameters offloaded to the CPU. No costly cloud resources are needed, which makes AI deployment more flexible and economical.

Deployment Methods Revealed

- GPT-OSS 20B: Can run on a single RTX 4000 Ada (20GB VRAM), ideal for edge devices, small businesses, or individual developers.

- GPT-OSS 120B: Splits the model across four RTX 4000 Ada cards running in parallel (tensor parallelism).

- 4-bit Quantization Support: Further compresses memory requirements, so large models fit on more modest hardware.

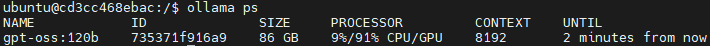

Test note: GPT-OSS 120B requires approximately 86GB of memory. With four RTX 4000 Ada cards (80GB in total), some parameters are offloaded to the CPU, and the model still runs stably. For optimal performance, use two RTX 6000 Ada cards (96GB in total).
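The arithmetic behind the test note can be sketched as follows. This is a simplified capacity check using only the figures quoted above; it ignores per-GPU runtime overhead and uneven sharding, and the function name is illustrative.

```python
def plan_placement(model_gb: float, gpu_count: int, vram_per_gpu_gb: float) -> dict:
    """Compare a model's memory need with pooled VRAM across identical GPUs."""
    total_vram = gpu_count * vram_per_gpu_gb
    return {
        "total_vram_gb": total_vram,
        "cpu_offload_gb": max(0.0, model_gb - total_vram),  # what spills to system RAM
        "headroom_gb": max(0.0, total_vram - model_gb),     # spare VRAM, if any
    }


# Four 20GB cards versus two 48GB cards for the ~86GB requirement.
print(plan_placement(86, 4, 20))
print(plan_placement(86, 2, 48))
```

With four 20GB cards, about 6GB has to live in system memory, while two 48GB cards leave about 10GB of headroom. That is consistent with the recommendation of the larger cards for best performance, since offloaded parameters must be reached over PCIe.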

Experimental Record: AIR-520 Exceeds Expectations in Real-World Performance

We ran the GPT-OSS models on an AIR-520 fitted with four RTX 4000 Ada cards and measured the following inference speeds:

| Model | Throughput (tokens/s) | Suitable Scenarios |
|---|---|---|
| GPT-OSS 20B | ~49 | Smooth conversations, document summarization, code analysis |
| GPT-OSS 120B | ~13.8 | Deep reasoning, text-to-image prompt generation, etc. |

Key Findings:

Because of the MoE architecture, only about 5.1B parameters are activated per token for the 120B model, which significantly reduces the computational load. Even without top-tier cloud hardware, the AIR-520 runs large models stably, which means this class of AI is no longer exclusive to large enterprises.
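To put the measured throughput into perspective, the rates above can be converted into approximate response times. The sketch below assumes a 500-token reply (an arbitrary illustrative length) and ignores prompt processing time, so real latencies will be somewhat higher.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Time to stream a reply at a steady decode rate, ignoring prompt processing."""
    return output_tokens / tokens_per_second


# Throughput figures taken from the measurements reported above.
MEASURED_TOKENS_PER_SECOND = {"GPT-OSS 20B": 49.0, "GPT-OSS 120B": 13.8}

for model, rate in MEASURED_TOKENS_PER_SECOND.items():
    seconds = generation_seconds(500, rate)
    print(f"{model}: about {seconds:.1f} s for a 500-token reply")
```

At these rates a 500-token answer takes roughly 10 seconds on the 20B model and roughly 36 seconds on the 120B model, which matches the suggested split between interactive conversation and deeper, less latency-sensitive reasoning tasks.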

Five Key Advantages of Local Deployment

By deploying GPT-OSS on the AIR-520, you benefit from:

- Data Security: All inference is processed locally, safeguarding enterprise data privacy.

- Low Latency: Local inference avoids the network round trip and rate limits of a cloud API, enabling real-time interaction.

- High Customizability: Supports fine-tuning, self-training, and multilingual configurations.

- Cloud Cost Savings: Avoids high API usage fees.

- Offline Operation: Not restricted by network availability, suitable for diverse deployment environments.
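As an illustration of what local processing looks like from the application side, the sketch below sends a chat request to an inference server on the same machine. It assumes the model is served behind an OpenAI-compatible chat-completions route, which several local inference servers provide; the article does not state which serving software was used, so the URL, port, and model name here are hypothetical placeholders.

```python
import json
import urllib.request

# Hypothetical values: adjust to whatever your local inference server exposes.
LOCAL_URL = "http://localhost:8000/v1/chat/completions"
MODEL_NAME = "gpt-oss-20b"


def build_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build a chat-completions POST aimed at the local server (no cloud call)."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        LOCAL_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the reply text; requires the server to be running."""
    with urllib.request.urlopen(build_request(prompt), timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

# Example (only works with a server listening at LOCAL_URL):
# print(ask("Summarize the benefits of on-premises inference in one sentence."))
```

Because the request never leaves the machine, the prompt and the response stay on local hardware, and the same code keeps working without an internet connection.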

Breakthrough Highlights: Large AI Models Are No Longer Out of Reach!

GPT-OSS 20B can run on edge devices with just 16GB of memory. Combined with the industrial-grade stability and scalability of the AIR-520, small and medium enterprises, local developers, and large organizations alike can now run their own AI brain on their own hardware.

Quick Guide to Application Scenarios

GPT-OSS 20B (Lightweight and Efficient)

- Edge computing devices

- Local development/rapid iteration

- Cost-sensitive SMEs

- Personal PC/workstation deployment

GPT-OSS 120B (Enterprise-Grade Powerhouse)

- Intelligent agent systems

- Complex reasoning/decision support

- Industry 4.0/smart manufacturing

- Fintech/risk analysis

Target Users

- Data-sovereignty-sensitive sectors: healthcare, finance, government, defense

- Factory automation, smart cities, IoT

- Highly customized intelligent assistant needs

- AI startups, research institutes, technical teams

Advantech Continues to Innovate: The New Era of AI Applications Has Arrived!

This technological breakthrough means that high-quality large language model inference is no longer the exclusive privilege of cloud giants. The Advantech AIR-520 platform showcases our continuous innovation and R&D capabilities in the field of edge AI computing, bringing AI truly “down to earth” across all industries. In line with the open-source spirit of OpenAI GPT-OSS, whether you’re an enterprise, research institute, or developer, you can easily deploy, customize, and expand your own intelligent applications.

AI applications are shifting from centralized cloud services to distributed edge intelligence. Advantech AIR-520 and GPT-OSS are the perfect partners in this revolution, ushering in a new era of private AI capabilities for enterprises!

Want to learn more? Contact us and let’s build your intelligent AI future together!