Why Is Choosing the Right LLM So Important? #

Imagine you have a team of super-powered AI assistants, each with unique skills. To get the most out of them, you need to pick the right one for the job. In the rapidly evolving era of Large Language Models (LLMs), selecting the model that best fits your task is just as crucial as choosing the right assistant! This guide walks you through, from scratch, how to choose a base LLM for fine-tuning, so you can strike a balance between resources, performance, language, and applications.

Because AI models evolve quickly, this guide reflects the information available as of July 2025. We recommend checking the most recent model developments regularly.

What Is an LLM? In What Scenarios Is It Used? #

LLMs (Large Language Models) act as versatile assistants capable of auto-generating text, answering questions, supporting customer service, analyzing sentiment, and even helping with programming. With the boom in digital transformation, AI-powered customer support, and smart manufacturing, LLMs have become essential tools for enterprises to boost efficiency and gain a competitive edge.

But with so many models on the market, how do you choose? The answer lies in this article!

Step 1: Task Requirements Determine the Level of Assistant #

Do You Need a “PhD-level” or “High School-level” Assistant? #

- High-Difficulty Tasks (PhD-level):

  - Examples: Complex code generation, advanced knowledge Q&A, logical reasoning

  - Recommended models: Llama-3.1-405B-Instruct (the most powerful, but requires top hardware!), Llama-3.3-70B-Instruct, DeepSeek-R1-Distill-Llama-70B, Qwen2.5-72B-Instruct

- General Tasks (College-level):

  - Examples: Text generation, basic customer support, sentiment analysis

  - Recommended models: Llama-3.1-8B-Instruct, Mixtral-8x7B-Instruct-v0.1, Mistral-7B-Instruct-v0.3, Gemma-2-9b-it, Gemma-3-12b-it, Qwen3-14B

- Lightweight Tasks (High School-level):

  - Examples: Scenarios that demand maximum speed or must run on limited resources

  - Recommended models: Gemma-3-1b-it, Gemma-3-4b-it, Qwen3-0.6B, Phi-3.5-mini-instruct, DeepSeek-R1-Distill-Qwen-1.5B
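The three tiers above amount to a simple lookup. Here is a minimal Python sketch of that idea. The tier keys and the `recommend` helper are illustrative names invented for this example (they are not part of GenAI Studio), and each list is an abridged copy of the recommendations above.

```python
# Abridged model lists copied from the tiers above.
# The tier keys ("phd", "college", "high_school") are made up for this sketch.
RECOMMENDED = {
    "phd": [
        "Llama-3.1-405B-Instruct",
        "Llama-3.3-70B-Instruct",
        "DeepSeek-R1-Distill-Llama-70B",
        "Qwen2.5-72B-Instruct",
    ],
    "college": [
        "Llama-3.1-8B-Instruct",
        "Mistral-7B-Instruct-v0.3",
        "Gemma-3-12b-it",
        "Qwen3-14B",
    ],
    "high_school": [
        "Gemma-3-1b-it",
        "Gemma-3-4b-it",
        "Qwen3-0.6B",
        "Phi-3.5-mini-instruct",
    ],
}


def recommend(tier):
    """Return the candidate models for a task tier, or raise on an unknown tier."""
    try:
        return list(RECOMMENDED[tier])
    except KeyError:
        raise ValueError(f"unknown tier {tier!r}; expected one of {sorted(RECOMMENDED)}")


if __name__ == "__main__":
    print(recommend("college"))
```

Treat the result as a shortlist only: hardware, language, and licensing (the steps that follow) narrow it further.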

Step 2: Model Families—Each With Unique Strengths #

Imagine models as different brands of tools, each with its own strengths:

- Google Gemma 3 Series: Strong multilingual support, excellent reasoning, open-source, and diverse options—an all-round rising star.

- Meta Llama Series: Versatile, vibrant community, and widely applied.

- DeepSeek Series: Experts in code and math, especially suited for technical applications.

- Mistral Series: Balances efficiency and performance; Mixtral 8x7B is highly acclaimed.

- Microsoft Phi Series: Compact and powerful, the top choice for lightweight tasks.

- Qwen Series: Exceptional Chinese language understanding, ideal for Mandarin markets.

- IBM Granite Series: Premier choice for enterprise-grade applications.

Tip: If you have concerns about models developed in Mainland China, the Gemma 3 series is currently the most reliable choice, with Gemma 3-27B standing out in particular!

Step 3: Hardware Resources Determine Your Freedom of Choice #

- Ample Resources (Multiple High-end GPUs): You can confidently choose large models (e.g., Llama-3.1-405B-Instruct).

- Moderate Resources (Single or Few Mid/High-end GPUs): Medium-sized models are recommended (e.g., Gemma-3-12B, Mixtral-8x7B).

- Limited Resources (Entry-level GPU or CPU only): Small models are the most practical (e.g., Gemma-3-1b-it, Phi-3-mini-4k-instruct), and you may consider efficient fine-tuning techniques like LoRA.
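To get a rough idea of which tier your hardware falls into, note that weight memory is approximately the parameter count times the bytes per parameter (2 bytes for 16-bit weights, about 0.5 bytes for 4-bit quantization). The sketch below is a back-of-the-envelope estimate only: the 20% overhead factor is an assumption, and real usage depends on context length, batch size, and the runtime. The second helper illustrates why LoRA is light: for one weight matrix of shape d_out × d_in, rank-r adapters add only r × (d_in + d_out) trainable parameters. Both function names are hypothetical.

```python
def estimate_weight_gb(params_billion, bytes_per_param=2.0, overhead=1.2):
    """Rough memory in GB to hold the weights, padded by an assumed overhead factor.

    One billion parameters at one byte each is about 1 GB, so the units cancel.
    """
    return params_billion * bytes_per_param * overhead


def lora_trainable_params(d_in, d_out, rank):
    """Trainable parameters LoRA adds to a single d_out x d_in weight matrix."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    # Nominal sizes read off the model names used in this step.
    for name, size_b in [
        ("Gemma-3-1b-it", 1),
        ("Gemma-3-12B", 12),
        ("Llama-3.1-405B-Instruct", 405),
    ]:
        fp16 = estimate_weight_gb(size_b)
        int4 = estimate_weight_gb(size_b, bytes_per_param=0.5)
        print(f"{name}: ~{fp16:.0f} GB at 16-bit, ~{int4:.0f} GB at 4-bit")

    full = 4096 * 4096
    lora = lora_trainable_params(4096, 4096, rank=8)
    print(f"LoRA rank 8 on a 4096x4096 matrix trains {lora} of {full} weights")
```

By this estimate a 12B model needs roughly 29 GB at 16-bit, which is why it sits in the "single or few mid/high-end GPUs" tier, while a 405B model needs several hundred GB even when quantized.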

Step 4: Language & Localization—You Control Your Vocabulary #

- Chinese Domain: Qwen series (especially suited for Mandarin markets)

- General Needs: Llama, Mistral, and Gemma series are all great

- Taiwan Localization: Llama-3-Taiwan-70B-Instruct

- Security Considerations: Gemma 3-27B is an excellent alternative for Chinese tasks

Step 5: Commercial Licensing—Don’t Forget Compliance! #

Planning for commercial use? Be sure to check the model’s licensing terms to ensure legal compliance and a worry-free launch for your application!

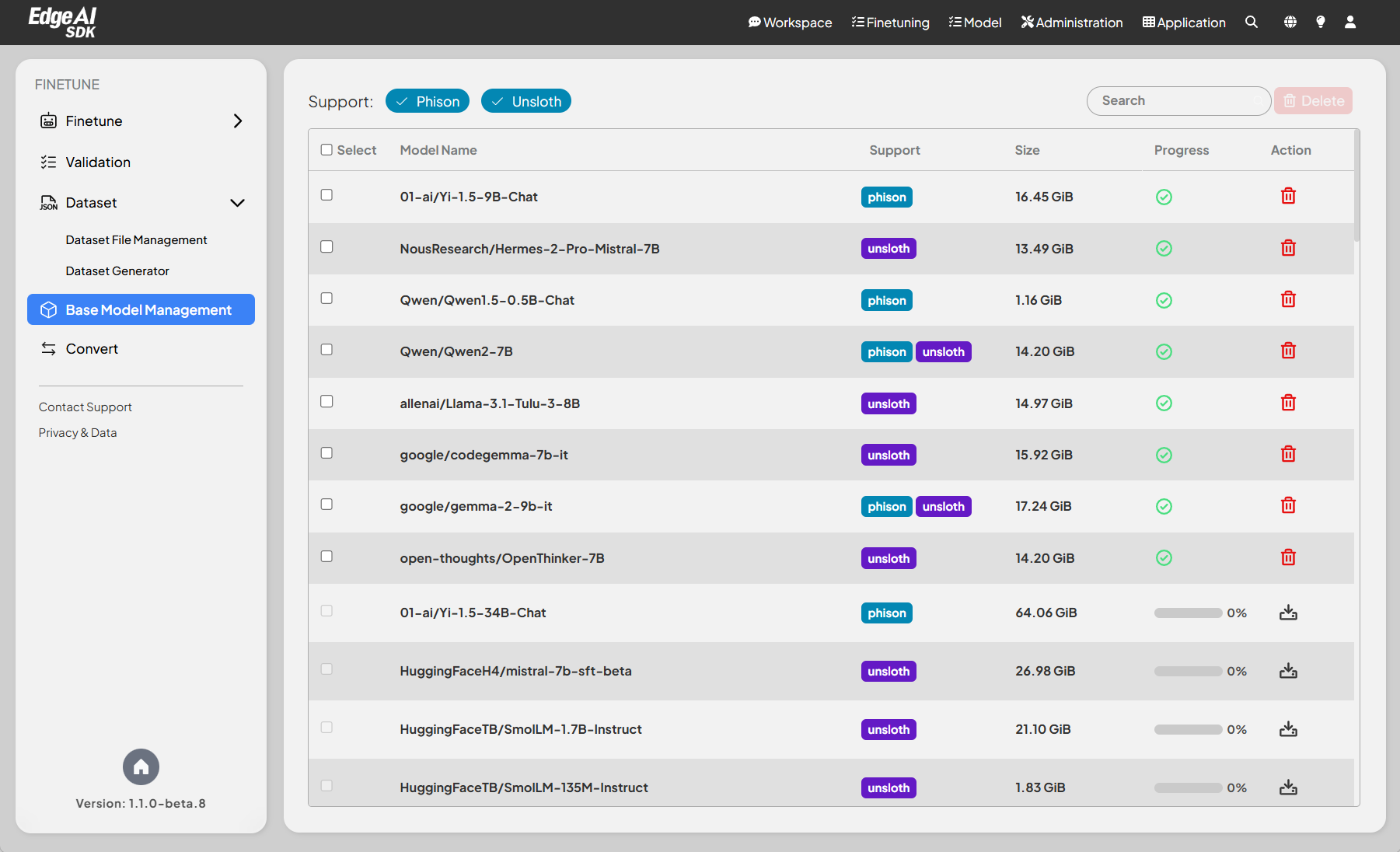

GenAI Studio—Effortless Model Deployment #

Advantech’s GenAI Studio makes model deployment a breeze, with two easy options:

Online Download (Recommended!) #

- Simple Operation: Connect to the internet, register with Hugging Face, obtain an Access Token, and download directly in the GenAI Studio interface with one click!

- License Reminder: For models requiring extra licensing, the system will automatically prompt you to accept the terms.

Offline Manual Deployment #

- Maximum Security: Ideal for offline or internal proprietary model needs

- Simple Steps:

  - Download the model (including config files, weights, tokenizer, etc.) when online

  - Package the files and transfer to GenAI Studio via USB or internal network

  - Place them in the designated directory (e.g., /home/adv/Advantech/GenAI-Studio/data/server/models/pre-trained)

  - Enter GenAI Studio and rescan; the system will automatically load the new model

- Reference Directory Structure:

      /home/adv/Advantech/GenAI-Studio/data/server/models/pre-trained
      ├── Llama-3.2-1B-Instruct
      ├── Mistral-Nemo-Instruct-2407
      ├── Phi-3-medium-4k-instruct
      ├── Qwen1.5-0.5B-Chat
      └── Qwen1.5-4B-Chat
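The offline steps above can be sketched in Python. This is a sketch under stated assumptions, not GenAI Studio's own tooling. It assumes the `huggingface_hub` package is installed on the online machine, and that a complete Hugging Face checkpoint contains a `config.json`, at least one tokenizer file, and at least one weight file (`*.safetensors` or `*.bin`). The helper names are hypothetical, and the models directory is the example path given above.

```python
import shutil
from pathlib import Path

# Example directory from the steps above; adjust it to your installation.
MODELS_ROOT = Path("/home/adv/Advantech/GenAI-Studio/data/server/models/pre-trained")


def download_model(repo_id, dest, token=None):
    """Online machine: fetch config, weights, and tokenizer files into dest."""
    # Imported lazily so the rest of this file works without the package or network.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id, local_dir=str(dest), token=token)


def missing_parts(model_dir):
    """Return which kinds of files appear to be missing from a model folder."""
    model_dir = Path(model_dir)
    # Assumed layout of a typical Hugging Face checkpoint.
    checks = {
        "config": ["config.json"],
        "tokenizer": ["tokenizer.json", "tokenizer.model", "tokenizer_config.json"],
        "weights": ["*.safetensors", "*.bin"],
    }
    missing = []
    for part, patterns in checks.items():
        if not any(any(model_dir.glob(pattern)) for pattern in patterns):
            missing.append(part)
    return missing


def install_model(model_dir, models_root=MODELS_ROOT):
    """GenAI Studio machine: copy a complete model folder into the models directory."""
    model_dir = Path(model_dir)
    problems = missing_parts(model_dir)
    if problems:
        raise ValueError(f"{model_dir.name} looks incomplete, missing: {problems}")
    target = Path(models_root) / model_dir.name
    shutil.copytree(model_dir, target)  # fails if the target folder already exists
    return target
```

Checking the folder before copying catches a partial download while you still have internet access, rather than after the files have reached the offline machine. After `install_model` succeeds, rescan in GenAI Studio as described in the steps.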

Get the Latest Supported Model List #

Want to know which models GenAI Studio currently supports? Check out the official documentation! Reminder: Whenever possible, choose the latest version of a given model type, as newer versions are generally smarter and more stable.

Real-World Scenario: How to Choose for Chinese Customer Service Q&A with Limited Resources? #

Scenario:

You want to use limited hardware resources to deploy a model that can handle Traditional Chinese customer service Q&A with reasonable logical reasoning ability. What should you choose?

Recommended Order:

- Gemma 3-27B: Best overall performance; if you have concerns about Mainland China models, this is your top pick!

- Qwen3-14B / Qwen3-8B: Strong Chinese understanding, balanced performance and resource use.

- Gemma-2-9b-it: A good balance between performance and resource requirements.

- Mixtral-8x7B-Instruct-v0.1: Excellent reasoning and high efficiency.

- Llama-3.1-8B-Instruct: Great general capabilities, decent Chinese support.

- Llama-3-Taiwan-70B-Instruct: Top choice for Taiwan localization (but requires more resources).

When resources are extremely limited:

- Phi-3-mini-4k-instruct or Qwen3-1.7B: Compact yet practical!
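One way to apply the recommended order is to walk the list from the top and take the first model whose estimated memory fits your GPU. The sketch below assumes 16-bit weights by default, a 20% overhead factor, and nominal parameter counts read off the model names (Phi-3-mini is about 3.8B). The candidate list is abridged, and the function name is hypothetical.

```python
# (model name, nominal parameters in billions), in the recommended order above (abridged).
CANDIDATES = [
    ("Gemma 3-27B", 27.0),
    ("Qwen3-14B", 14.0),
    ("Qwen3-8B", 8.0),
    ("Llama-3.1-8B-Instruct", 8.0),
    ("Phi-3-mini-4k-instruct", 3.8),
    ("Qwen3-1.7B", 1.7),
]


def first_model_that_fits(vram_gb, bytes_per_param=2.0, overhead=1.2):
    """Return the highest-ranked candidate whose estimated memory fits in vram_gb.

    Estimated memory = parameters (billions) * bytes per parameter * overhead.
    Returns None when nothing on the list fits.
    """
    for name, params_b in CANDIDATES:
        if params_b * bytes_per_param * overhead <= vram_gb:
            return name
    return None


if __name__ == "__main__":
    print(first_model_that_fits(24))                       # 16-bit weights
    print(first_model_that_fits(24, bytes_per_param=0.5))  # assuming 4-bit quantization
```

Under these assumptions, a 24 GB GPU gets Qwen3-8B at 16-bit, and Gemma 3-27B if you are willing to run a 4-bit quantized model. The estimate ignores long contexts and fine-tuning overhead, so leave yourself headroom.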

Advantech’s Ongoing Innovation Leads the Future of AI Fine-tuning #

At Advantech, we firmly believe that continuous innovation and proactive R&D are key to staying ahead in the AI wave. Whether you are a technical expert, sales partner, or an AI enthusiast, choosing the right LLM fine-tuning model is your first step to doubling your productivity!

Quick Takeaways:

- Clarify your task requirements and resource constraints first

- Choose the most suitable model family based on language and application scenarios

- Don’t forget to check commercial licensing to ensure compliance

- Leverage GenAI Studio for flexible deployment and get your AI assistant online with ease!

The AI landscape is evolving rapidly, and Advantech will continue to bring you the latest and most practical technology updates. Be a part of the next breakthrough!